metafacture-tutorial

Lesson 2: Introduction into Metafacture Flux

To process data with Metafacture, transformation workflows are configured with Metafacture Flux, a domain-specific scripting language (DSL). With Metafacture Flux we combine different modules for reading, opening, transforming, and writing datasets.

In this lesson we will learn about Metafacture Flux, what Flux workflows are and how to combine different Flux modules to create a workflow in order to process datasets.

Getting started with the Metafacture Playground

To process data, Metafacture can be used from the command line, as a Java library, or through the Metafacture Playground.

For this introduction we will start with the Playground, since it allows a quick start without installing anything. The Metafacture Playground is a web interface to test and share Metafacture workflows. Working on the command line is the subject of lesson 6.

In this tutorial we are going to process structured information. We call data structured when it is organised in such a way that it can easily be processed by computers. Literary text documents like War and Peace are structured only in words and sentences, but a computer doesn’t know which words are part of the title or which words contain names. We have to tell the computer that. Today we will download a book record in a structured format called JSON and inspect it with Metafacture.

Flux Workflows

Let’s jump to the Playground to learn how to create workflows:

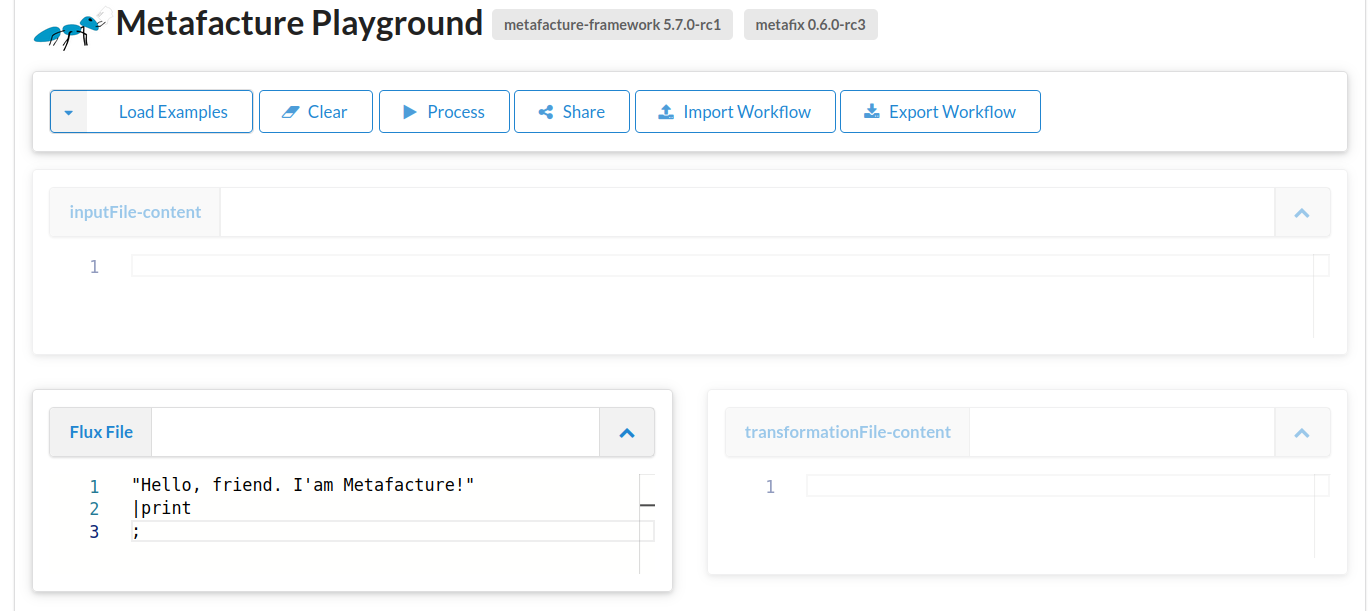

See the window called Flux?

Copy the following short code sample into the playground:

"Hello, friend. I'am Metafacture!"

|print

;

Great, you have created your first Metafacture Flux Workflow. Congratulations!

Now you can press the Process button or press Ctrl+Enter to execute the workflow.


See the result below? It is Hello, friend. I'am Metafacture!.

But what have we done here?

We have a short text string "Hello, friend. I'am Metafacture!". It is printed with the module print.

A Metafacture workflow is nothing more than an incoming text string followed by one or more modules that do something with that string. The workflow does not have to start with a literal text string, though. It can also start with a variable that stands for the text string and is defined before the workflow, like this:

INPUT="Hello, friend. I'am Metafacture!";

INPUT

|print

;

Copy this into the window of your playground or just adjust your example.

The variable INPUT is defined in the first line of the Flux. Instead of the text string, the Flux workflow now starts with the variable INPUT, written without quotation marks.

The result is the same when you process the Flux.

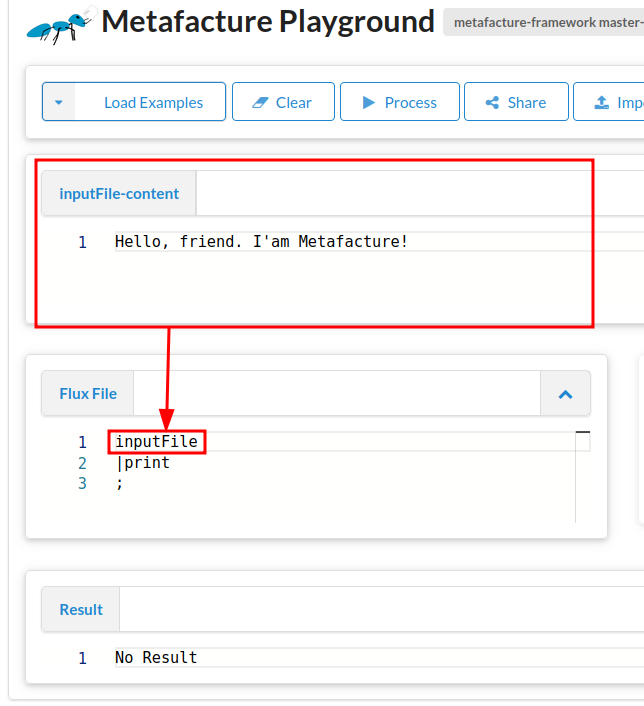

Often you want to process data stored in a file.

The Playground has an input area called inputFile-content. In this text area you can insert data that you would usually store in a file. The variable inputFile can be used at the beginning of the workflow; it refers to the input file represented by the inputFile-content area.

So let’s use inputFile instead of INPUT and copy the value of the text string into the data field above the Flux.

Data for inputFile-content:

Hello, friend. I'am Metafacture!

Flux:

inputFile

|print

;

Oops… There seems to be unusual output. It’s a file path. Why?

Because the variable inputFile refers to a file (path).

To read the content of the file we need to handle the incoming file path differently.

(You will learn how to process files on your computer in lesson 6, when we show how to run Metafacture on the command line.)

We need to add two additional Metafacture commands: open-file and as-lines

Flux:

inputFile

| open-file

| as-lines

| print

;

The inputFile is opened as a file (open-file) and then processed line by line (as-lines).

You can see that in this sample.

We usually do not start with random text strings but with data. So let’s play around with some data.

Let’s start with a link: https://openlibrary.org/books/OL2838758M.json

You will see data that look like this:

{"publishers": ["Belknap Press of Harvard University Press"], "identifiers": {"librarything": ["321843"], "goodreads": ["2439014"]}, "covers": [413726], "local_id": ["urn:trent:0116301499939", "urn:sfpl:31223009984353", "urn:sfpl:31223011345064", "urn:cst:10017055762"], "lc_classifications": ["JA79 .S44 1984", "HM216 .S44", "JA79.S44 1984"], "key": "/books/OL2838758M", "authors": [{"key": "/authors/OL381196A"}], "ocaid": "ordinaryvices0000shkl", "publish_places": ["Cambridge, Mass"], "subjects": ["Political ethics.", "Liberalism.", "Vices."], "pagination": "268 p. ;", "source_records": ["marc:OpenLibraries-Trent-MARCs/tier5.mrc:4020092:744", "marc:marc_openlibraries_sanfranciscopubliclibrary/sfpl_chq_2018_12_24_run01.mrc:195791766:1651", "ia:ordinaryvices0000shkl", "marc:marc_claremont_school_theology/CSTMARC1_barcode.mrc:137174387:3955", "bwb:9780674641754", "marc:marc_loc_2016/BooksAll.2016.part15.utf8:115755952:680", "marc:marc_claremont_school_theology/CSTMARC1_multibarcode.mrc:137367696:3955", "ia:ordinaryvices0000shkl_a5g0", "marc:marc_columbia/Columbia-extract-20221130-001.mrc:328870555:1311", "marc:harvard_bibliographic_metadata/ab.bib.01.20150123.full.mrc:156768969:815"], "title": "Ordinary vices", "dewey_decimal_class": ["172"], "notes": {"type": "/type/text", "value": "Bibliography: p. 251-260.\nIncludes index."}, "number_of_pages": 268, "languages": [{"key": "/languages/eng"}], "lccn": ["84000531"], "isbn_10": ["0674641752"], "publish_date": "1984", "publish_country": "mau", "by_statement": "Judith N. Shklar.", "works": [{"key": "/works/OL2617047W"}], "type": {"key": "/type/edition"}, "oclc_numbers": ["10348450"], "latest_revision": 16, "revision": 16, "created": {"type": "/type/datetime", "value": "2008-04-01T03:28:50.625462"}, "last_modified": {"type": "/type/datetime", "value": "2024-12-27T16:46:50.181109"}}

This is data in JSON format, but it is not very readable.

Still, all these fields tell us something about a publication: a book with 268 pages and the title Ordinary Vices by Judith N. Shklar.

Let’s copy the JSON data into our inputFile-content field and run the workflow again.

The output in result is the same as the input and it is still not very readable.

Let’s turn the one line of JSON data into YAML. YAML is another format for structured information which is a bit easier for human eyes to read. In order to change the serialization of the data we need to decode the data and then encode it again.

Metafacture has lots of decoder and encoder modules for specific data formats that can be used in a Flux workflow.

Let’s try this out. Add the modules decode-json and encode-yaml to your Flux workflow.

The Flux should now look like this:

Flux:

inputFile

| open-file

| as-lines

| decode-json

| encode-yaml

| print

;

When you process the data our book record should now look like this:

---

publishers:

- "Belknap Press of Harvard University Press"

identifiers:

  librarything:

  - "321843"

  goodreads:

  - "2439014"

covers:

- "413726"

local_id:

- "urn:trent:0116301499939"

- "urn:sfpl:31223009984353"

- "urn:sfpl:31223011345064"

- "urn:cst:10017055762"

lc_classifications:

- "JA79 .S44 1984"

- "HM216 .S44"

- "JA79.S44 1984"

key: "/books/OL2838758M"

authors:

- key: "/authors/OL381196A"

ocaid: "ordinaryvices0000shkl"

publish_places:

- "Cambridge, Mass"

subjects:

- "Political ethics."

- "Liberalism."

- "Vices."

pagination: "268 p. ;"

source_records:

- "marc:OpenLibraries-Trent-MARCs/tier5.mrc:4020092:744"

- "marc:marc_openlibraries_sanfranciscopubliclibrary/sfpl_chq_2018_12_24_run01.mrc:195791766:1651"

- "ia:ordinaryvices0000shkl"

- "marc:marc_claremont_school_theology/CSTMARC1_barcode.mrc:137174387:3955"

- "bwb:9780674641754"

- "marc:marc_loc_2016/BooksAll.2016.part15.utf8:115755952:680"

- "marc:marc_claremont_school_theology/CSTMARC1_multibarcode.mrc:137367696:3955"

- "ia:ordinaryvices0000shkl_a5g0"

- "marc:marc_columbia/Columbia-extract-20221130-001.mrc:328870555:1311"

- "marc:harvard_bibliographic_metadata/ab.bib.01.20150123.full.mrc:156768969:815"

title: "Ordinary vices"

dewey_decimal_class:

- "172"

notes:

  type: "/type/text"

  value: "Bibliography: p. 251-260.\nIncludes index."

number_of_pages: "268"

languages:

- key: "/languages/eng"

lccn:

- "84000531"

isbn_10:

- "0674641752"

publish_date: "1984"

publish_country: "mau"

by_statement: "Judith N. Shklar."

works:

- key: "/works/OL2617047W"

type:

  key: "/type/edition"

oclc_numbers:

- "10348450"

latest_revision: "16"

revision: "16"

created:

  type: "/type/datetime"

  value: "2008-04-01T03:28:50.625462"

last_modified:

  type: "/type/datetime"

  value: "2024-12-27T16:46:50.181109"

This is easier to read, right?

But we are not limited to the data in our inputFile-content field; we can also open resources on the web.

Instead of using inputFile, let’s read the book data that is provided by the URL from above.

Clear your playground and copy the following Flux workflow:

"https://openlibrary.org/books/OL2838758M.json"

| open-http

| as-lines

| decode-json

| encode-yaml

| print

;

The result in the Playground should be the same as before, but with the module open-http you can fetch text that is provided via a URL.

Let’s take a look at what a Flux workflow does. A Flux workflow is a combination of different modules that process incoming structured data. In our example we do the following things with these modules:

- First, we have a URL as input. The URL states the location of the data on the web.

- Second, we tell Metafacture to request the stated URL using open-http.

- Then we say how to handle the incoming data: since the JSON is written in one line, we tell Metafacture to regard every new line as a new record with as-lines.

- Afterwards we tell Metafacture to decode-json in order to translate the incoming JSON data into the generic internal data model, which is called metadata events.

- Then Metafacture should serialize the metadata events as YAML with encode-yaml.

- Finally, we tell Metafacture to print everything.

So let’s have a small recap of what we have done and learned so far.

We played around with the Metafacture Playground.

We learned that a Metafacture Flux workflow is a combination of modules that starts with an initial text string or a variable.

We got to know different modules: open-http, as-lines, decode-json, encode-yaml, and print.

More modules can be found in the documentation of available flux commands.

Now take some time and play around a little bit more and use some other modules.

1) Try to change the Flux workflow to output formeta (a Metafacture-specific data format) instead of YAML.

2) Configure the style of formeta to multiline.

3) Also try not to print the output but to write it to a file called book.xml.

The new workflow:

```default
"https://openlibrary.org/books/OL2838758M.json"
| open-http
| as-lines
| decode-json
| encode-formeta(style="multiline")
| write("book.xml")
;
```

What you see with the modules encode-formeta and write is that modules can take further specifications in brackets.

These can either be a string in "..." or attributes that define options, as with style=.

One last thing to grasp, on an abstract level, is the general idea of Metafacture Flux workflows: they consist of many different modules through which the data flows. The most abstract and most common process resembles the following steps:

→ read → decode → transform → encode → write →

This process takes incoming data and transforms it so that it leaves the workflow in a changed form. Each step can be done by one module or by a combination of multiple modules. Modules are small tools that each do one part of the complete task we want to perform.
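
As a rough sketch, the generic steps can be mapped onto the formeta workflow from the exercise above. The grouping of modules into steps shown in the comments is only an interpretation for illustration, not official Metafacture terminology:

```default
// read: fetch the data and split it into one record per line
"https://openlibrary.org/books/OL2838758M.json"
| open-http
| as-lines
// decode: turn each JSON record into metadata events
| decode-json
// transform: nothing yet, transformations are the topic of the next lesson
// encode: serialize the metadata events as formeta
| encode-formeta(style="multiline")
// write: store the result in a file
| write("book.xml")
;
```

Note that a single step can span several modules: here, reading is done by open-http together with as-lines.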

Each module demands a certain input and gives a certain output. This is called its signature. For example:

The first module, open-file, expects a string and provides read data (called a reader).

This reader data can be passed on to a module that accepts reader data, in our case as-lines.

as-lines in turn outputs a string, which is accepted by the decode-json module.

If you have a look at the Flux module/command documentation, you will see under signature which data a module expects and which data it outputs.
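
To make the idea of matching signatures more concrete, the file-based workflow from earlier in this lesson can be annotated with the signature of each module. The signatures of open-file, as-lines and decode-json follow from the text of this lesson; the ones noted for encode-yaml and print are assumptions and should be checked against the documentation of the Flux commands:

```default
inputFile
// open-file: String -> Reader
| open-file
// as-lines: Reader -> String
| as-lines
// decode-json: String -> StreamReceiver
| decode-json
// encode-yaml: StreamReceiver -> String (assumed, check the documentation)
| encode-yaml
// print: Object -> Void (assumed, check the documentation)
| print
;
```

Reading from top to bottom, the output type of each module matches the input type of the module that follows it, which is why the modules can be chained in this order.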

The combination of modules is a Flux workflow.

Each module is separated by a | and every workflow ends with a ;.

Comments can be added with //.

See:

//input string:

"https://openlibrary.org/books/OL2838758M.json"

// MF Workflow:

| open-http

| as-lines

| decode-json

| encode-formeta(style="multiline")

| write("test.xml")

;

Exercise

1) Try to pretty-print the book record in JSON.

Answer
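
A sketch of one workflow for this exercise, assuming the same Open Library URL as in the examples above and print as the final step:

```default
"https://openlibrary.org/books/OL2838758M.json"
| open-http
| as-lines
| decode-json
// encode as JSON again, this time with pretty printing switched on
| encode-json(prettyPrinting="true")
| print
;
```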

Add the option `prettyPrinting="true"` to the `encode-json` command.

2) Have a look at the documentation of decode-xml. What is different from decode-json? What input does it expect and what output does it create (hint: signature)?

Answer

The signatures of `decode-xml` and `decode-json` are quite different.

`decode-xml`: signature: Reader -> XmlReceiver

`decode-json`: signature: String -> StreamReceiver

Explanation: `decode-xml` expects data from the Reader output of `open-file` or `open-http` and creates output that can be transformed by a specific XML `handler`. The XML parser of `decode-xml` works directly with the read content of a file or a URL. `decode-json` expects string data, such as the output of `as-lines` or `as-records`, and creates output that can be transformed by `fix` or encoded with a module like `encode-xml`. For most decoders you have to specify how the incoming data is read (`as-lines` or `as-records`).

3) Fill in the blanks in the Metafacture workflow to transform some local PICA data to YAML.

Answer

See here

4) Collect MARC-XML from the web and transform it to JSON.

Answer

See here

As you have surely noticed, transform was mentioned as one step in a Metafacture workflow.

But aside from changing the serialisation, we have not played around with transformations yet. This will be the topic of the next lesson.

Next lesson: 03 Introduction into Metafacture-Fix